What is Oracle RAC interconnect

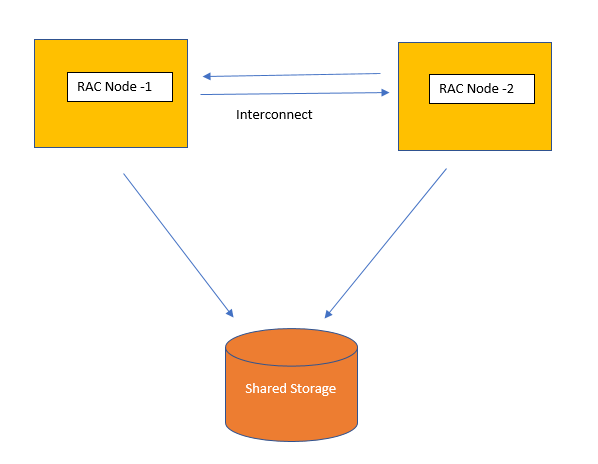

The RAC interconnect is a very important part of the cluster environment; it is one of the aortas of a cluster. The interconnect is the physical layer between the cluster nodes: it carries the heartbeats, and Cache Fusion uses it as well. The interconnect must be a private connection. Crossover cables are not supported.

Day-to-day operation has shown that a correctly configured interconnect will not be the bottleneck in case of performance issues. The rest of this article focuses on how to validate that the interconnect is really used. An Oracle DBA must be able to validate the interconnect settings in case of performance problems. The physical attachment of the interconnect is out of scope.

Although you should treat performance issues in a cluster environment the same way you would in non-cluster environments, here are some areas you can focus on. Normally the average interconnect latency on Gigabit Ethernet must be below 5 ms. Latencies around 2 ms are normal.
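One common way to estimate this latency from inside the database is to divide the cumulative global cache receive time by the number of blocks received. The sketch below does only that arithmetic. It assumes the two inputs are taken from the `gc cr block receive time` (reported in centiseconds) and `gc cr blocks received` statistics in gv$sysstat; neither is named in this article, so check them against your release. The function name `avg_gc_latency_ms` is made up for illustration.

```shell
# Hypothetical helper: average block receive latency in milliseconds.
# $1 = cumulative receive time in centiseconds (assumed: 'gc cr block receive time')
# $2 = number of blocks received              (assumed: 'gc cr blocks received')
avg_gc_latency_ms() {
  awk -v t="$1" -v n="$2" 'BEGIN {
    if (n == 0) { print "n/a"; exit }   # no blocks received yet, nothing to average
    printf "%.2f\n", (t * 10) / n       # centiseconds -> milliseconds
  }'
}

# Example: 500 centiseconds spent receiving 2500 blocks
avg_gc_latency_ms 500 2500   # prints 2.00
```

A result around 2 would match the "normal" range above, while a value at or above 5 would be a reason to look more closely at the interconnect.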

How to find Oracle RAC interconnect information

(1) Using the dynamic view gv$cluster_interconnects:

SQL> select * from gv$cluster_interconnects;

INST_ID NAME IP_ADDRESS IS_ SOURCE

1 en12 192.158.100.65 NO Oracle Cluster Repository

2 en12 192.158.100.66 NO Oracle Cluster Repository

(2) Using the clusterware command oifcfg:

$ oifcfg getif

en10 10.127.149.0 global public

en11 10.127.150.0 global public

en12 192.158.100.64 global cluster_interconnect
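When a node has several interfaces, it can help to filter this output down to the private network only. The following is a minimal sketch, assuming the column layout shown above (interface, subnet, scope, role); the function name `private_interconnects` is hypothetical and not part of the Clusterware tooling.

```shell
# Hypothetical helper: read `oifcfg getif` output on stdin and print
# "<interface> <subnet>" for every network whose role (last column)
# mentions cluster_interconnect.
private_interconnects() {
  awk '$NF ~ /cluster_interconnect/ { print $1, $2 }'
}

# Intended use on a cluster node (needs the Grid Infrastructure environment):
#   oifcfg getif | private_interconnects
```

For the sample output above this would leave only the en12 line, and that subnet should contain the addresses reported by gv$cluster_interconnects.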

(3) Using oradebug ipc:

sqlplus "/ as sysdba"

SQL>oradebug setmypid

Statement processed.

SQL>oradebug ipc

Information written to trace file.

The above command would dump a trace to user_dump_dest. The last few lines of the trace would indicate the IP of the cluster interconnect. Below is a sample output of those lines.

(4) Information in /etc/hosts

#RAC Cluster 1

#

192.158.100.65 Node1-priv

192.158.100.66 Node2-priv

10.127.149.15 Node1

10.127.149.136 Node2
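The private entries can be pulled out of the hosts file with a small filter. This is a sketch only: it assumes the private host names end in `-priv`, as in the sample above, which is a naming convention rather than a requirement. The function name `private_hosts` is hypothetical.

```shell
# Hypothetical helper: print "<ip> <hostname>" for private host entries.
# Assumes private names end in "-priv", as in the sample above.
# $1 = hosts file to read (defaults to /etc/hosts)
private_hosts() {
  awk '!/^[[:space:]]*#/ && NF >= 2 {
    for (i = 2; i <= NF; i++) {
      if ($i ~ /^#/) break              # ignore trailing comments on the line
      if ($i ~ /-priv$/) print $1, $i
    }
  }' "${1:-/etc/hosts}"
}

# Intended use:
#   private_hosts            # reads /etc/hosts
#   private_hosts ./hosts    # reads a copy of the file
```

The addresses it prints should be the same ones reported by gv$cluster_interconnects.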

Best Practices for Oracle RAC interconnect

(1) For the private network, 10 Gigabit Ethernet is highly recommended; the minimum requirement is 1 Gigabit Ethernet.

(2) To prevent the public network or the private interconnect network from being a single point of failure, Oracle highly recommends configuring a redundant set of public network interface cards (NICs) and private interconnect NICs on each cluster node. Starting with 11.2.0.2, Oracle Grid Infrastructure can provide redundancy and load balancing for the private interconnect (NOT the public network). This is the preferred method of NIC redundancy for full 11.2.0.2 stacks (11.2.0.2 Database must be used).

(3) The use of a switch (or redundant switches) is required for the private network (crossover cables are NOT supported).

Dedicated redundant switches are highly recommended for the private interconnect, because deploying the private interconnect on a shared switch (even when using a VLAN) may expose the interconnect links to congestion and instability in the larger IP network topology. If you deploy the interconnect on a VLAN, there should be a 1:1 mapping of VLAN to non-routable subnet, and the VLAN should not span multiple VLANs (tagged) or multiple switches. Deployment concerns in this environment include Spanning Tree loops when the larger IP network topology changes, asymmetric routing that may cause packet flooding, and a lack of fine-grained monitoring of the VLAN/port.

(4) Consider using InfiniBand on the interconnect for workloads that have high volume requirements. InfiniBand can also improve performance by lowering latency. When InfiniBand is in place, the RDS protocol can be used to further reduce latency. Exadata and SuperCluster are configured with InfiniBand only, and we see good performance on those boxes.

(5) Starting with 12.1.0.1, IPv6 is supported for the public network; IPv4 must be used for the private network. Please see the Oracle Database IPv6 State of Direction white paper for details.

(6) Jumbo Frames for the private interconnect are a recommended best practice for enhanced performance of Cache Fusion operations.
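For the Jumbo Frames recommendation, it is worth checking that large frames really pass end to end without fragmentation. A rough sketch, assuming an MTU of 9000 and IPv4 without header options: the largest ICMP payload that fits in a single frame is the MTU minus 28 bytes (20 bytes IPv4 header plus 8 bytes ICMP header). The function name `icmp_payload_for_mtu` is hypothetical, and the ping flags in the comment are the Linux (iputils) ones; other operating systems use different options.

```shell
# Hypothetical helper: largest ICMP payload that fits in one frame of the given MTU.
# 20 bytes IPv4 header (no options) + 8 bytes ICMP header = 28 bytes of overhead.
icmp_payload_for_mtu() {
  echo $(( $1 - 28 ))
}

icmp_payload_for_mtu 9000   # prints 8972

# On Linux (an assumption; the sample system above uses en* interface names,
# and ping flags differ between operating systems), -M do forbids fragmentation:
#   ping -M do -c 3 -s "$(icmp_payload_for_mtu 9000)" Node2-priv
```

If that ping fails while a smaller payload (for example 1472, the value for a 1500-byte MTU) succeeds, some device on the private path is probably not configured for Jumbo Frames.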
